National Data Protection Authorities need to pave the way for private Covid-19 data sharing between companies and governments. This article proposes a rapid GDPR anonymization certification so that companies can quickly use existing commercial anonymization technologies for sharing Covid-19 data without legal risk.

Data is a key tool in managing the Covid-19 pandemic. Decision makers need data to understand the effect of open-society policies on the spread of the virus. In weighing privacy against public and economic health, most people will agree that during the crisis, health takes priority.

The EFF gives the following principles for Covid-19 data sharing:

- Privacy intrusions must be necessary and proportionate.

- Data collection based on science, not bias.

- Expiration.

- Transparency.

- Due Process.

One way to avoid unnecessary privacy intrusions is through data pseudonymization and anonymization. If the scientific or policy goal can be met with anonymized data, then the data should be anonymized. Unfortunately, most companies have no experience with anonymization, and those that do usually use simple traditional techniques like aggregation, which substantially reduce the value of the data and can leave it unable to meet science and policy goals. As a result, companies may instead rely on pseudonymized data, which by GDPR standards is still personal data.

The last few years have produced a wave of innovation in anonymization by startups. Aircloak offers a solution for descriptive analytics use cases. Companies like Statice and mostly.ai generate synthetic data using machine learning models. Privitar, Leapyear, and others offer products based on Differential Privacy. These companies and others can offer data anonymization solutions that go far beyond simple aggregated data.

Companies wishing to share data with government organizations may worry that the products offered by privacy startups do not meet the GDPR standards for anonymization, and may be reluctant to take advantage of recent innovations. Getting approval from national Data Protection Authorities (DPAs) in advance of sharing data is not a viable option. DPAs generally evaluate technologies on a use-case by use-case basis. Each DPA has its own procedure and policies, and the evaluations can take many months.

What is needed is a comprehensive fast-track EU-wide certification for anonymization technologies when used for sharing Covid-19 data.

Following the spirit of the EFF principles, the key points of such a certification program could be:

- Establish numerical thresholds for anonymization using the GDA Score from the Open GDA Score Project. The GDA Score is based on the three anonymization criteria from Article 29 Data Protection Working Party Opinion on Anonymization Techniques.

- Establish a rapid-evaluation team composed of national DPAs and academics.

  - Makes rapid provisional approvals or rejections (2-3 days).

  - Makes a subsequent, more thorough evaluation.

- Open public evaluation to invalidate the rapid evaluation.

  - Proposers of anonymization technology openly publish descriptions.

  - Proposers enable technical evaluation by providing samples of anonymized data or by providing access to the technology.

- Data shared with approved technologies is given the legal status of non-personal data.

The following expands on these key points.

Technical Criteria

The GDA Score is a method for assigning numerical scores to anonymization mechanisms. The scores rate the anonymization properties of the mechanisms with respect to specific attacks. The scores are generated by running attacks on the mechanisms and measuring the relative success of the attacks. In the attack, the attacker makes claims about user data. For instance, a singling-out claim might be “There is one user that has gender female and zip code 12345 and birth date 1992-02-15.”

Because the GDA Score is generated empirically based on specific attacks, it can be the case that a given anonymization mechanism is weaker than the score would indicate. This can happen when there are unknown attacks. To minimize the likelihood of this, it is important to have open public evaluation.

The GDA Score is in fact a set of scores for different aspects of anonymity. To keep things as simple as possible, the technical criteria for this proposal are based on two of those aspects: Confidence Improvement and Claim Probability.

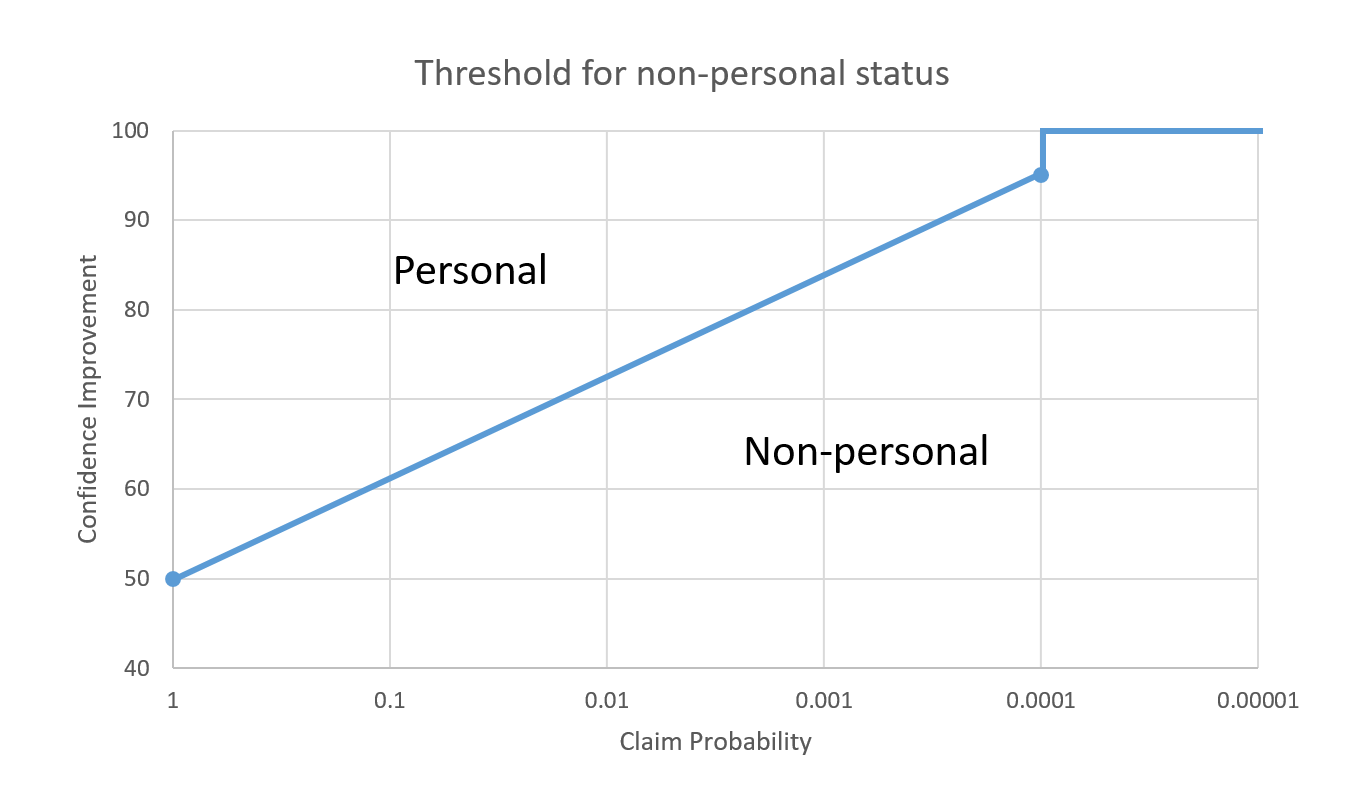

Confidence Improvement is a measure of how often an attacker’s claims are correct. Claim Probability is a measure of how likely it is that an attacker can make a claim. An anonymization mechanism can be considered strong if either Confidence Improvement is very low or Claim Probability is very low. For instance, if an attacker can make claims about all of the users, but only gets a 20% Confidence Improvement, then anonymity is strong. Likewise, if an attacker can make high-confidence claims (say 95% Confidence Improvement), but can only make them against 1/10000 users, then anonymity is strong.
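As a rough illustration of how these two scores could be derived from an attack run, the sketch below tallies an attacker's claims. The normalization used for Confidence Improvement, (precision - baseline) / (1 - baseline), is an assumption about how the gain over a statistical guess is expressed; the class, field, and function names are hypothetical and are not part of any GDA Score tooling.

```python
from dataclasses import dataclass


@dataclass
class AttackResult:
    """Tally of one attack run (all fields are illustrative assumptions)."""
    possible_claims: int  # users the attacker could have made a claim about
    claims_made: int      # claims the attacker actually made
    claims_correct: int   # claims that turned out to be true
    baseline: float       # precision of a statistical guess made without the anonymized data


def claim_probability(r: AttackResult) -> float:
    """Fraction of users about whom the attacker was able to make a claim."""
    if r.possible_claims == 0:
        return 0.0
    return r.claims_made / r.possible_claims


def confidence_improvement(r: AttackResult) -> float:
    """Precision gain over the baseline, scaled so a perfect attack gives 1.0 (assumed formula)."""
    if r.claims_made == 0 or r.baseline >= 1.0:
        return 0.0
    precision = r.claims_correct / r.claims_made
    return (precision - r.baseline) / (1.0 - r.baseline)


# The attacker makes claims about 1 in 10,000 users and every claim is correct,
# while a blind guess would be right half the time.
result = AttackResult(possible_claims=100_000, claims_made=10,
                      claims_correct=10, baseline=0.5)
print(claim_probability(result))
print(confidence_improvement(result))
```

In this example the attacker's claims are always correct, yet they cover so few users that the mechanism could still count as strong under the reasoning above.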

The following chart is a proposed numeric threshold for anonymity:

If an anonymization mechanism has no attack with Confidence Improvement and Claim Probability scores above the line, the data rendered anonymous with the mechanism is regarded as non-personal.
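The rule above can be sketched as a simple check over the scores of all known attacks. The threshold values below are invented placeholders, not the values from the proposed chart, and the names `PLACEHOLDER_LINE`, `ci_limit`, and `is_non_personal` are hypothetical. The line is modeled as a step function purely for simplicity.

```python
# Placeholder threshold line: (claim probability ceiling, highest tolerated
# confidence improvement). These numbers are invented for illustration only
# and do NOT reproduce the thresholds proposed in the chart.
PLACEHOLDER_LINE = [(0.0001, 0.95), (0.01, 0.50), (1.0, 0.20)]


def ci_limit(claim_prob, line=PLACEHOLDER_LINE):
    """Return the tolerated confidence improvement for a given claim probability."""
    for ceiling, limit in line:
        if claim_prob <= ceiling:
            return limit
    return line[-1][1]


def is_non_personal(attack_scores, line=PLACEHOLDER_LINE):
    """True if no known attack scores above the line."""
    return all(ci <= ci_limit(cp, line) for cp, ci in attack_scores)


# Each entry is (claim probability, confidence improvement) for one attack.
known_attacks = [(1.0, 0.20), (0.0001, 0.95)]
print(is_non_personal(known_attacks))                  # both attacks sit on or below the line
print(is_non_personal(known_attacks + [(0.5, 0.60)]))  # one attack sits above the line
```

Because the check covers only known attacks, a passing result is a presumption rather than a proof, which is why the proposal pairs it with open public evaluation.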

It is assumed that the attacker may have Prior Knowledge that assists in the attack. The GDA Score models prior knowledge as allowing the attacker to know some of the data.

This document gives a detailed example of how to establish Confidence Improvement and Claim Probability scores. (This comes from the Diffix Cedar 2020 bounty program, but the procedure for this proposal would be similar.)

Evaluation

The evaluation needs to find a balance between quickly providing critical data to governments and government-approved researchers, and protecting individual privacy. This proposal therefore suggests a three-tiered approach:

- Rapid (2-3 day) evaluation by the rapid evaluation team composed of national DPAs and academics for provisional approval.

- Optional more thorough (2-3 week) evaluation by the rapid evaluation team for final approval.

- Open public oversight that can lead to withdrawal of approval.

In all phases, there is a presumption of anonymity unless shown otherwise. In other words, unless it can be shown that the mechanism fails to meet the non-personal threshold, the mechanism is presumed to generate non-personal data.

The evaluation team does not need to literally run an attack to demonstrate failure to meet the threshold. It is sufficient for the evaluation team to describe the attack. If the proposer of the anonymization technology wishes to dispute the conclusion of the evaluation team and cannot do so with written arguments, then the onus is on the proposer to implement the attack and demonstrate that it does not work.

The proposer must provide the following documentation:

- A complete description of the anonymization mechanism

- A document describing why the non-personal threshold is met

The first phase should include at least three evaluators. The expectation is that each evaluator will spend one to two hours. If a vulnerability cannot be found in that timeframe, provisional approval is given.

The second and third phases take place simultaneously. In other words, immediately upon approval from the first phase, the proposer documentation is made public. In addition to the documentation, the proposer must provide:

- Examples of pre-anonymized and post-anonymized data sets (if the anonymization mechanism is static), or

- Access to the anonymization software and a pre-anonymized data set on which the software runs (if the anonymization mechanism is dynamic).

The second phase is optional, and need only take place if the evaluation team believes that there may be a vulnerability and requires more time to evaluate. This evaluation may be done by a single evaluator. If no additional evaluation is deemed necessary, then final approval may be given without further evaluation.

Members of the public may at any time notify the evaluation team and the proposer of alleged vulnerabilities. If it is not clear to the evaluation team that the vulnerability is real, then it is up to the member of the public to demonstrate the attack.
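To make the flow of the three phases concrete, here is a minimal sketch of the approval status as a small state machine. The status names and function names are hypothetical, and treating a vulnerability found in the second phase as a withdrawal of the provisional approval is an assumption; the proposal itself does not prescribe a data model.

```python
from enum import Enum


class Status(Enum):
    PROVISIONAL = "provisionally approved"
    FINAL = "finally approved"
    REJECTED = "rejected"
    WITHDRAWN = "approval withdrawn"


MIN_EVALUATORS = 3  # the first phase should include at least three evaluators


def rapid_evaluation(found_vulnerability):
    """Phase one: one boolean per evaluator, True if that evaluator described a working attack."""
    if len(found_vulnerability) < MIN_EVALUATORS:
        raise ValueError("phase one needs at least three evaluators")
    if any(found_vulnerability):
        return Status.REJECTED
    return Status.PROVISIONAL  # presumption of anonymity


def thorough_evaluation(status, vulnerability_found=False):
    """Phase two (optional): final approval unless a vulnerability is found."""
    if status is not Status.PROVISIONAL:
        return status
    return Status.WITHDRAWN if vulnerability_found else Status.FINAL


def public_report(status, vulnerability_demonstrated):
    """Phase three: a demonstrated vulnerability withdraws any existing approval."""
    approved = status in (Status.PROVISIONAL, Status.FINAL)
    if approved and vulnerability_demonstrated:
        return Status.WITHDRAWN
    return status


status = rapid_evaluation([False, False, False])  # no evaluator found an attack
status = thorough_evaluation(status)              # no further concerns
status = public_report(status, vulnerability_demonstrated=True)
print(status.value)
```

The sketch keeps the public oversight step callable at any time after provisional approval, mirroring the fact that the second and third phases run simultaneously.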

Next Steps

It is important that national and EU DPAs can move quickly to enable the use of new anonymization technologies. We hope that this proposal is a step in that direction. Please write to francis@mpi-sws.org and share your thoughts.