In theory, many anonymization methods may work well. In practice, anonymized results are usually either usable or strongly anonymized, but not both. Data scientists have been struggling for years with the tradeoff between data quality and privacy.

To make things worse, there are currently no common certifications or testing methods for anonymization solutions that define whether the anonymization carried out complies with the GDPR or not.

Prof. Paul Francis has been researching data anonymization for several years and recently addressed this complex issue. The result is the General Data Anonymity Score – a new approach with which common anonymization methods can be tested and compared.

Paul, can you tell us a little about yourself?

I’m a researcher at the Max Planck Institute for Software Systems in Kaiserslautern and co-founder of the startup Aircloak. My research focuses on data anonymity. I’m a relative newcomer to this area, having previously done research on Internet technologies. I think being a newcomer allows me to bring new thinking to the problem. In particular, working with Aircloak as a research partner really helps me stay grounded in developing practical solutions.

You came up with the “GDA-Score”, what is the idea behind it?

Unfortunately, there are no precise guidelines on how to determine if data is anonymous or not.

EU states are supposed to develop certification programs for anonymization, but none have done so yet, and no one is really sure how to do it. National Data Protection Authorities (DPAs) are from time to time asked to approve a narrowly scoped anonymization, such as a particular dataset for a particular use case, but the process so far has been ad hoc. The French national DPA, CNIL, which in my opinion is one of the best national DPAs, if not the best, offers opinions on anonymity. These opinions, however, do not state that a given scheme is anonymous. Rather, they only go so far as to state that CNIL does not find a problem with a given scheme. This stems from the sensible recognition that claiming anonymity with certainty is very difficult.

As we developed Aircloak over the last five years, we had to find ways to build confidence in our anonymization properties, both for ourselves and for others. One really useful approach is our bounty program on anonymity, where we pay attackers who can demonstrate how to compromise anonymity in our system. The program was successful in that some attacks were found, and in the process of designing defensive measures, our solution has improved.

To run the bounty program, we naturally needed a measure of anonymity so that we could decide how much to pay attackers. We designed it based on, among other things, how much confidence an attacker has in a prediction of an individual’s data values. At some point, we realized that our measure applies not just to attacks on Aircloak, but to any anonymization method, such as pseudonymization, data masking, k-anonymization, differential privacy and other variants. We also realized that our measure might be useful in the design of certification programs for anonymity.
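To make the idea of a confidence-based measure concrete, here is a minimal Python sketch. It is an illustration only, not the formula used in the bounty program or by the GDA Score project: the function name `confidence_improvement`, its parameters, and the normalization against a baseline guess are all assumptions introduced here.

```python
def confidence_improvement(correct_claims, total_claims, baseline_confidence):
    """Hypothetical measure: how much better the attacker's predictions are
    than a baseline guess made without the anonymized data.

    correct_claims / total_claims is the attacker's observed confidence.
    baseline_confidence is the assumed success rate of a purely statistical
    guess (for example, always predicting the most common value).
    """
    if not 0.0 <= baseline_confidence < 1.0:
        raise ValueError("baseline_confidence must be in [0, 1)")
    if total_claims == 0:
        # The attacker made no predictions, so there is no gain to measure.
        return 0.0
    confidence = correct_claims / total_claims
    # 0.0 means no better than the baseline, 1.0 means always correct.
    return (confidence - baseline_confidence) / (1.0 - baseline_confidence)


# An attacker who is right 9 times out of 10, where a blind guess would be
# right half of the time.
print(confidence_improvement(9, 10, 0.5))
```

Normalizing against a baseline is one way to avoid rewarding predictions that anyone could make from general statistics alone; a real measure would also have to account for the "other things" mentioned above, which this sketch leaves out.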

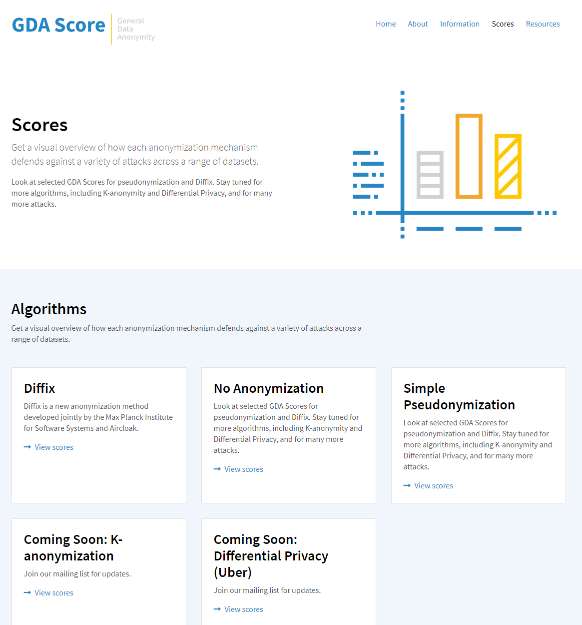

The General Data Anonymity Score is an open project where everyone is invited to contribute – https://www.gda-score.org/

How does the scoring work?

On our website www.gda-score.org we provide various resources such as an attack library, tools for measuring the results, and sample databases. These allow anyone to run their own experiments with a particular algorithm and to compare the results in terms of utility and privacy.

The core concept behind the GDA Score is that of explicitly querying the de‐identified data, and basing the defense and utility measures on the responses. The current criteria for measuring defense are those of the EU Article 29 Data Protection Working Party Opinion on Anonymization: singling‐out, linkability, and inference. This might be extended over time, but for now these are the only criteria.
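The query-based idea can be illustrated with a toy Python sketch of a singling-out attack. Everything in it is hypothetical: the records, the suppression threshold, and the function names are made up for illustration and are not taken from the GDA Score tools or attack library. The attacker only sees query responses, and the defense is judged by how many correct singling-out claims those responses allow.

```python
from collections import Counter

# Hypothetical raw records: (user_id, zip_code, birth_year)
RAW_RECORDS = [
    ("u1", "67663", 1980),
    ("u2", "67663", 1980),
    ("u3", "67655", 1975),
    ("u4", "67657", 1991),
]


def make_count_query(records, min_count):
    """Toy de-identification: answer count queries, suppress small counts."""
    def query(zip_code, birth_year):
        count = sum(1 for _, z, y in records if z == zip_code and y == birth_year)
        return count if count >= min_count else 0
    return query


def singling_out_claims(query, candidates):
    """Claim 'exactly one person has these attributes' when the answer is 1."""
    return [c for c in candidates if query(*c) == 1]


def score_claims(claims, records):
    """Return (claims made, claims that are actually correct)."""
    truth = Counter((z, y) for _, z, y in records)
    correct = sum(1 for c in claims if truth[c] == 1)
    return len(claims), correct


# Simplifying assumption: the attacker already knows which attribute
# combinations are worth asking about.
candidates = sorted({(z, y) for _, z, y in RAW_RECORDS})

unprotected_score = score_claims(
    singling_out_claims(make_count_query(RAW_RECORDS, 1), candidates), RAW_RECORDS)
suppressed_score = score_claims(
    singling_out_claims(make_count_query(RAW_RECORDS, 2), candidates), RAW_RECORDS)

print(unprotected_score)  # (2, 2): two users singled out
print(suppressed_score)   # (0, 0): small counts are hidden, no claims possible
```

Because the attack only calls `query`, the same attack code could be pointed at a different de-identification method without changes, which is the property that makes a like-for-like comparison possible.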

With this approach, any de‐identification scheme can be measured in the same way independent of the underlying technology. This is the primary strength of the GDA Score: that it works with any de‐identification technology and therefore allows an apples‐to‐apples comparison of different approaches. There is, however, also a weakness: the score is only as good as the best attack. If there are unknown attacks, the GDA Score won’t reflect this. That is why we are making the GDA Score an open project. If many different parties contribute attacks, we’ll eventually work towards a near-complete set.

Who will benefit the most from that score?

I think the biggest benefit will go to companies that use anonymization technologies, and of course the individuals whose privacy is protected. As far as anonymization vendors go, I think the GDA Score will help those that have good technologies, and hurt those who are over-stating the strength of their anonymity. As one of the developers of Aircloak Insights, I think the GDA Score will help people realize the strength of our technology.

Who is testing the specific anonymization methods?

At the moment it is just my group at MPI-SWS, but my hope is that other organizations will contribute. In particular, researchers develop new attacks from time to time, but usually nothing more than a published paper comes out of it. If the researchers contribute their attacks to the GDA Score project, using the tools we developed, then others can benefit directly from those attacks, for instance by trying them on their own datasets or anonymization techniques.

Thanks Paul, we are looking forward to the further development!
