While theoretical examples and academic approaches to data anonymization are plentiful, it is hard to find information on actual current use cases. To fill this gap, we continuously collect the best examples and present them here.

At the end of this article we also name tools and vendors that claim to solve some of the respective use cases. Note we cannot test all tools. Where specific vendors are mentioned, this is done only to make your further research easier and not to be misunderstood as a recommendation on our part.

1. Data Monetization

2. Partnering

3. Reporting to 3rd Parties

4. Open Data / Open Government Data

5. Privacy-preserving Machine Learning

6. Reporting Dashboards

7. Data Retention

8. Creation of Test Data Sets

9. Data Streaming

(10.) Where Anonymization Isn’t Possible

Data Monetization

Challenge

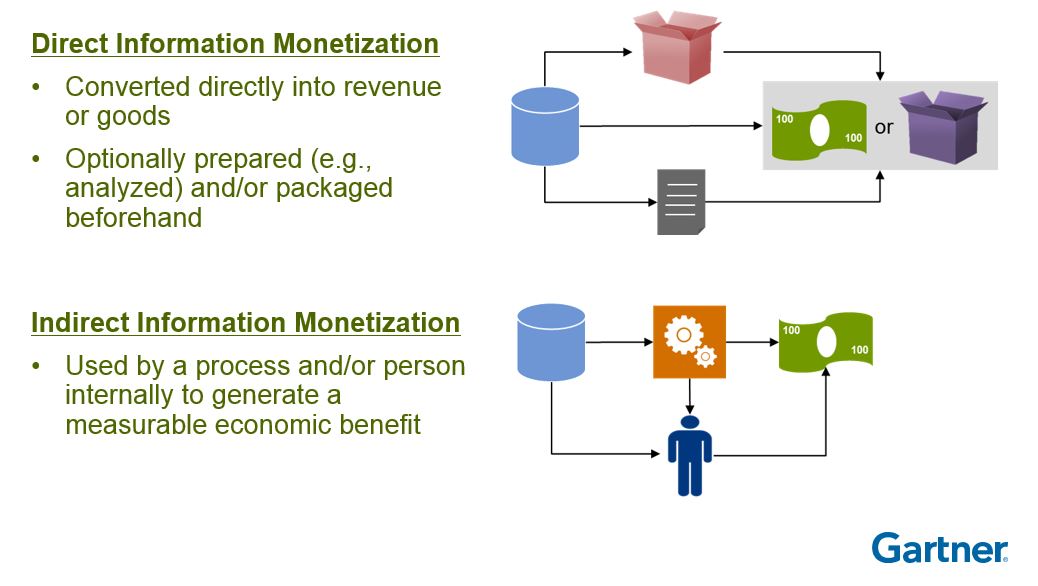

The research companies BARC and Gartner define Data Monetization quite broadly. The BARC study “Data Monetization – Use Cases, Implementation, Added Value” published in May 2019 still shows data monetization at an early stage, but with constantly increasing relevance. Gartner distinguishes between “direct” and “indirect” monetization.

In both cases, one of the biggest hurdles to implementing data monetization projects is concern about data security. Anonymizing the data can be a good way to address such concerns.

Examples

Direct Monetization

Once fully anonymized, a dataset can be analysed or sold to third parties without needing additional consent from the customers. Also, more and more platforms and marketplaces are being established where (anonymized) data can be traded, such as Dawex.

Indirect Monetization

One of our customers, a large German bank, uses anonymous data for improved customer information, which is then used in product development and marketing. We have described this use case in more detail on our website.

Approaches To Consider

- Static Data Anonymization

- Dynamic Data Anonymization

Partnering

Challenge

Data about individuals is already streaming in from many different sources. Only in the rarest cases does a company have access to the many different contact points that a customer goes through in the course of his customer journey. Partnerships are therefore an ideal way of breaking the current data monopolies of GAFA.

A ‘customer’ journey map in healthcare. Data can come from diagnostics, lifestyle sensors, labs, electronic records, clinical trial data, genomic data, physicians and patients themselves. [Courtesy https://www.macadamian.com/]

Examples

Partnerships where data is shared can look very different. At the Hackathon Urban Data, which took place in Berlin in 2019, various residential construction and public transportation companies provided their data. The participants were then able to extract anonymized results from the aggregate data. The winning team designed an algorithm that makes more effective use of free parking spaces and helps to improve the quality of life of city dwellers.

Some other real-life examples are more controversial. A recent article in the Belgian L’Echo reports on a “mega-alliance” of media and telecommunications companies that joined forces to exchange data about users so that more and better targeted advertising can be placed. The media response has been critical so far and the project is under investigation by the Belgian data protection authority.

Another case has recently occurred in the USA, where the University of Chicago Medical Center is partnering with Google to share patient data. Both are now accused of sharing hundreds of patient records without removing any identifiable date stamps or medical notes.

The examples show that this kind of project requires a high degree of transparency. In what form will the data be collected, shared and made anonymous?

Approaches To Consider

- Dynamic Data Anonymization

Reporting to 3rd Parties

Challenge

Reporting from anonymized sensitive datasets may be relevant for compliance reasons. The demands for such anonymization should be evaluated on a case-by-case basis. Relevant factors are, among others, what information the third party already holds (less is easier), how quickly the dataset is changing (quicker is easier) and how interactive the report should be (less is easier).

Examples

Hospitals are often required to report up-to-date statistics to local or national government institutions. Such reporting must not contain personal information where it can be avoided. A dynamic anonymization layer can be a quick way of enabling such reports in high quality and tailored to the exact questions of the receiving institution.
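As a rough illustration of one ingredient of such reporting, the sketch below aggregates records into counts and withholds any group that is too small to publish safely. The record layout, the `ward` field and the `min_count` threshold are hypothetical, and this is not how any particular product works. Small-count suppression on its own does not guarantee anonymity (for example, it does not protect against comparing several overlapping reports), so treat it as a minimal sketch only.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping


def aggregate_report(
    records: Iterable[Mapping[str, str]],
    key: str,
    min_count: int = 5,
) -> Dict[str, int]:
    """Count records per value of `key`, publishing only sufficiently large groups.

    Groups smaller than `min_count` are pooled into "(other)". The pooled
    group is itself only published if it reaches `min_count`.
    """
    counts = Counter(record[key] for record in records)
    report: Dict[str, int] = {}
    pooled = 0
    for value, n in counts.items():
        if n >= min_count:
            report[value] = n
        else:
            pooled += n  # too small to publish on its own
    if pooled >= min_count:
        report["(other)"] = pooled
    return report


if __name__ == "__main__":
    # Hypothetical admissions data: one row per patient, identifiers already dropped.
    admissions = [{"ward": "cardiology"}] * 12 + [{"ward": "oncology"}] * 7 + [{"ward": "rare-disease"}] * 2
    print(aggregate_report(admissions, key="ward", min_count=5))
```

The receiving institution only ever sees the aggregated dictionary, never the individual rows.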

Approaches To Consider

- Static Anonymization

- Dynamic Anonymization

Open Data / Open Government Data

Challenge

Companies are not alone in possessing valuable data. Universities, research institutes, and public authorities also have access to very interesting information that could be made available to the general public and be used for the greater good.

The key question is: how can we maximize public access to, and value from, open granular information while protecting privacy? As early as 2017, we at Aircloak worked together with the German think tank ‘Stiftung Neue Verantwortung’ on a guideline for data protection in Open Data (in German), which recommends a series of measures for working with open data and anonymization solutions.

Examples

Like all good science fiction, the movie “Minority Report” was based on real-world developments that have now found their conclusion in Predictive Policing. The biggest vendor in this field is Predpol, which works with single profiles and bases its predictions on social networks and crime records. Their competitor Hunchlab, on the other hand, shows how fully anonymous, open data can be used to fight crime without having to resort to surveillance.

Apart from the criminal justice system, open data can be used to improve the health and safety of citizens, transportation infrastructure, quality of education and equality and stability of the country’s housing market, among other uses. The Briefing Paper on Open Data and Privacy explains the benefits of open microdata in much more detail than we could in this blog and it’s definitely worth a read. The paper also names privacy as the key issue and goes more into detail about the advantages and drawbacks of anonymization with this particular use case.

Another popular example where public data is going to be anonymized with current technology is the upcoming US Census 2020. The Bureau is planning to use differential privacy (you can find more on the pros and cons on differential privacy here), but not without facing serious criticism. Many experts consider the use of differential privacy to be excessive, as this would significantly impair the informative value and usability of the data.
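The trade-off behind this criticism can be made concrete with the textbook Laplace mechanism for a counting query. This is a minimal sketch for illustration and not the algorithm the Census Bureau uses; the function names and the example count are assumptions. A smaller privacy parameter `epsilon` means stronger privacy but noisier published counts.

```python
import random


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Return a count with Laplace noise of scale 1/epsilon added.

    A counting query changes by at most 1 when one person is added or
    removed (sensitivity 1), so the Laplace scale is 1/epsilon. The
    difference of two exponential draws with rate epsilon follows a
    Laplace distribution with that scale.
    """
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


def mean_abs_error(true_count: int, epsilon: float, runs: int, rng: random.Random) -> float:
    """Average absolute deviation of the noisy count from the true count."""
    total = 0.0
    for _ in range(runs):
        total += abs(dp_count(true_count, epsilon, rng) - true_count)
    return total / runs


if __name__ == "__main__":
    rng = random.Random(42)
    for eps in (0.1, 1.0, 10.0):
        err = mean_abs_error(true_count=250, epsilon=eps, runs=5000, rng=rng)
        print(f"epsilon={eps}: mean absolute error ~ {err:.2f}")
```

For a small town or a small demographic group, noise of this size can be large relative to the true count, which is the core of the usability concern.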

Approaches To Consider

- Static Anonymization

- Dynamic Anonymization

Privacy-preserving Machine Learning

Challenge

Voice assistants, advertising, auto-complete: machine learning powers many of the products we use today. Because machine learning is data-hungry, it can have a negative impact on data privacy. Companies avoid compliance risks when they train machine learning algorithms on anonymized data instead of raw data. But since anonymization removes some of the informational value of the data, it can distort or completely destroy important correlations. On the other hand, introducing noise into the data can also help to avoid overfitting the models later on.

Examples

The paper “Privacy Preserving Machine Learning: Threats and Solutions” names common approaches to privacy-preserving machine learning (PPML): cryptographic methods such as homomorphic encryption, garbled circuits, secret sharing and secure processors, and ultimately the generation of synthetic data.

One of the biggest advantages of synthetic data is that it’s not limited to structured data like text files, but it can also be audio, video or image data. Forbes named a few applications in the article “Does Synthetic Data Hold The Secret To Artificial Intelligence”:

- Healthcare: Simulated X-rays combined with actual X-rays to train AI algorithms to identify conditions

- Finance: Fraudulent activity detection systems can be tested and trained without exposing personal financial records

- Transportation: Algorithms for autonomous driving analyse synthetic video data instead of actual camera data

As you can see, there are many possibilities here as well. At Aircloak, we are also currently experimenting with anonymized synthetic data and will soon be able to share more on this.

Approaches To Consider

- Synthetic Data

Reporting Dashboards

Challenge

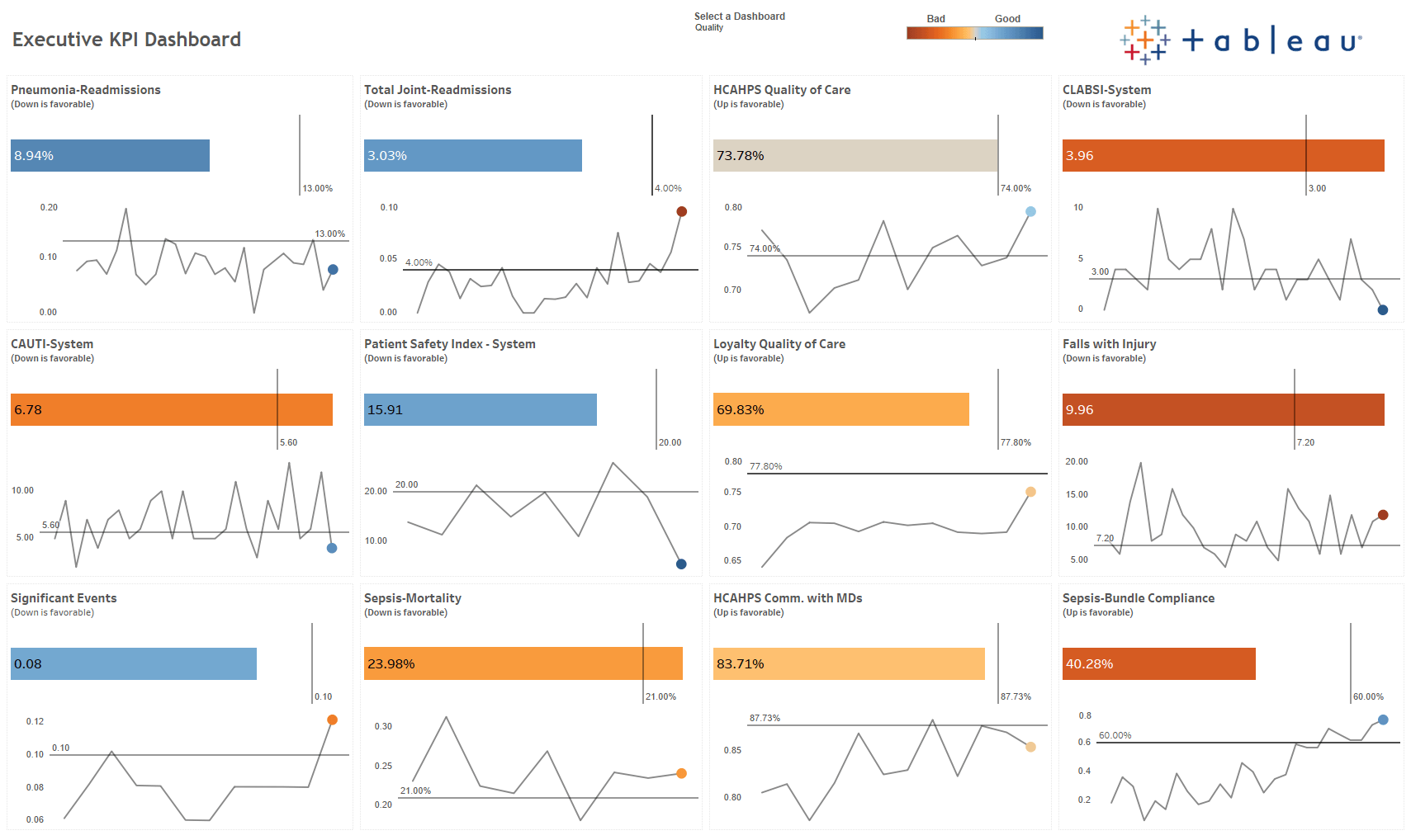

Business intelligence and data analysis are extremely important at all levels of an organization. In the past, decisions were made intuitively or based on assumptions; today, management has access to a vast amount of business and market data. The recent acquisition of Tableau shows how relevant even simple business intelligence still is. With the help of anonymization, reporting dashboards can include sensitive data in anonymized form and thus provide even more information.

Examples

Modern, dynamic anonymization tools, such as Aircloak Insights, connect business intelligence tools like Tableau directly to critical databases, for example using the Postgres Message Protocol. The raw data is then queried via an anonymizing interface. The results are insights that would otherwise not have been accessible for data protection reasons.

Approaches To Consider

- Dynamic Data Anonymization

Data Retention

Challenge

Data minimization is one of the core principles of the GDPR (Article 5), which also limits how long personal data may be stored. However, once personal data has been anonymized, restrictions on retention periods no longer apply. In December 2018, the Austrian Data Protection Authority clarified that personal data can also be erased by anonymizing it.

Examples

According to their privacy policies, a rising number of companies are using anonymization to store search log data, for example Yahoo and Google. The blog article ‘Data Retention Policies Demystified’ goes more into detail about this topic.

Approaches To Consider

- Static Data Anonymization

Creation of Test Data Sets

Challenge

Customer data helps DevOps teams to test software and ensure quality. But copying live personal data into testing environments without consent can represent a data privacy violation under GDPR. In any case, you need to tick off one of the points from Article 6 GDPR ‘Lawfulness of processing’ in order to use data in testing, development or quality assurance environments.

Examples

Because test data usually needs similar properties to the original data, synthetic data can be a suitable substitute without risking the privacy of users. But privacy doesn’t come for free and generating synthetic data is not as easy as it sounds.

There are certain conditions that apply for anonymization in test data. As an example, let’s take a vendor for HR Software, which has outsourced their QA to a development studio abroad. In order to be able to test the software under real life conditions, the testers need to have access to data sets that are pretty similar to the original data. Synthetic data sets of high quality preserve the correlations of the raw data and also map outliers as well as edge cases. If an employee has changed his name several times or has a new nationality, you really need to know how the software reacts to this.
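To show why this is harder than it sounds, here is a deliberately naive baseline: it learns how often each value occurs in each column and then samples every column independently. The column names and rows are hypothetical. The output has realistic-looking values, but it loses exactly the cross-column correlations and unusual combinations that the paragraph above says good test data needs, and rare values copied from the original can still be identifying.

```python
import random
from collections import Counter
from typing import Dict, List, Mapping, Sequence


def fit_marginals(rows: Sequence[Mapping[str, str]], columns: Sequence[str]) -> Dict[str, Counter]:
    """Count how often each value occurs in each column, ignoring other columns."""
    return {col: Counter(row[col] for row in rows) for col in columns}


def sample_synthetic(marginals: Mapping[str, Counter], n: int, rng: random.Random) -> List[Dict[str, str]]:
    """Draw n rows, sampling each column independently from its observed frequencies."""
    synthetic = []
    for _ in range(n):
        row = {}
        for col, counts in marginals.items():
            values = list(counts.keys())
            weights = list(counts.values())
            row[col] = rng.choices(values, weights=weights, k=1)[0]
        synthetic.append(row)
    return synthetic


if __name__ == "__main__":
    # Hypothetical HR extract with direct identifiers already removed.
    original = [
        {"department": "sales", "nationality": "DE"},
        {"department": "sales", "nationality": "FR"},
        {"department": "engineering", "nationality": "DE"},
        {"department": "engineering", "nationality": "DE"},
    ]
    marginals = fit_marginals(original, ["department", "nationality"])
    for row in sample_synthetic(marginals, n=3, rng=random.Random(1)):
        print(row)
```

A generator suited for testing would need to model the joint distribution of the columns rather than each column in isolation.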

Approaches To Consider

- Synthetic Data

Data Streaming

Challenge

Anonymization of single events, often coming from a single client, is a much trickier problem to solve than anonymization of aggregate data. Yet exactly such approaches are vital for certain use cases such as connected cars.

Examples

One of the more popular examples of anonymization at the edge of a network comes from Apple, who announced in 2016 they’d start to use Differential Privacy to analyze users’ emoji- and keyboard use. Their specific implementation came under fire quickly, but that does not mean it can’t be done. Randomized response algorithms are well-understood and easy to explain.
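A classic randomized response scheme can be sketched in a few lines. This is a generic textbook variant, not Apple's implementation; the function names and the `p_truth` parameter are assumptions. Each client reports its true yes/no answer only with a certain probability and otherwise reports a coin flip, so no single report can be trusted, yet the overall rate can still be estimated from many reports.

```python
import random
from typing import Sequence


def randomized_response(truth: bool, p_truth: float, rng: random.Random) -> bool:
    """Report the true answer with probability p_truth, otherwise a fair coin flip."""
    if rng.random() < p_truth:
        return truth
    return rng.random() < 0.5


def estimate_true_rate(reports: Sequence[bool], p_truth: float) -> float:
    """Estimate the real share of 'yes' answers from noisy reports.

    The expected observed rate is p_truth * true_rate + (1 - p_truth) * 0.5,
    so the estimate solves that equation for true_rate.
    """
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p_truth) * 0.5) / p_truth


if __name__ == "__main__":
    rng = random.Random(7)
    # Hypothetical population: 30% of clients use a given emoji.
    truths = [rng.random() < 0.3 for _ in range(100_000)]
    reports = [randomized_response(t, p_truth=0.75, rng=rng) for t in truths]
    print(f"estimated rate: {estimate_true_rate(reports, p_truth=0.75):.3f}")
```

The noise is added on the device, before anything is sent, which is what makes the approach suitable for single events coming from a single client.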

Approaches To Consider

- Streaming Anonymization

- Dynamic Anonymization

Where Anonymization Isn’t Possible

Choosing the correct anonymization solution for your project can be a daunting task. With the current lack of standardization or certificates, careful review of approaches before implementation is key. Sometimes, this also includes the insight that it might simply not be possible to anonymize data for your use case.

Anonymization is a privilege, and if a given dataset cannot be confidently anonymized for an analytic task, then that data should be regarded as pseudonymous and personal, and protected accordingly.

For example, to comply with the opinion on anonymization techniques laid out by the European Data Protection Board (formerly known as the Article 29 Working Party), one can argue that audiovisual files and free text cannot truly be anonymized. Of course, that doesn’t mean that they can’t be adequately protected.

Providers of data anonymization solutions

Below you can find a non-exhaustive list of anonymization tools and providers. We haven’t tested them all, but we hope that the list helps you in your further research for an anonymization solution. You can also find a list of vendors with innovative solutions in this B2B Privacy Tech Landscape.

If you are interested in benchmarking and comparing anonymization solutions, you should have a look at the Open General Data Anonymity Score Project, initiated by the Max Planck Institute for Software Systems.

| Approach | Type | Examples |
|---|---|---|
| Dynamic Anonymization | Tools | Google’s RAPPOR |
| Dynamic Anonymization | Vendors | |
| Static Anonymization | Tools | |
| Static Anonymization | Vendors | |
| Synthetic Data | Vendors | Statice |
| Streaming Anonymization | Vendors | |

Do you want to know more?

Now we would like to hear from you: What kind of anonymization challenges do you face in your company? Which solutions are you using? Maybe you think that Aircloak can help you, but you are not 100% sure? No problem – schedule a demo with us and we can figure out if we are the perfect fit for your project. We are keen to hear from you – write us at solutions@aircloak.com.
